Complete the mission or follow the rules? Air Force tests AI in flight experiment

- By Hope Seck

In a quiet July experiment, engineers at Eglin Air Force Base, Florida, launched a small unmanned plane and told it to break the rules. The plane, an Osprey MK III, was programmed with a flight path that would take it beyond identified boundary constraints. This was the big test: would the plane respect the pre-established “laws” that allowed it to operate safely? Or would its onboard AI decide it had to follow orders at all costs?

Once the plane was airborne, experimenters switched controls to onboard autonomy and sat back to see what it would do.

Sure enough, the Osprey MK III’s “watchdog feature” proved stronger than the rule-breaking programming. Each time the plane got close to breaking the established airspace boundary, the feature would kick in, disengaging autonomy mode and sending it back to a pre-designated point for remediation, according to Air Force releases about the test. Its AI made the right decision.

That test, a pivotal validation moment, was conducted at the Air Force’s new Autonomy Data and AI Experimentation proving ground (ADAx) at Eglin. Established earlier this year, the proving ground is a joint enterprise between the Pentagon’s Chief Digital and AI Office, or CDAO, and the Air Force’s experimentation branch, AFWERX. Eglin’s 96th Test Wing takes the lead for experimentation efforts, while other base units provide support.

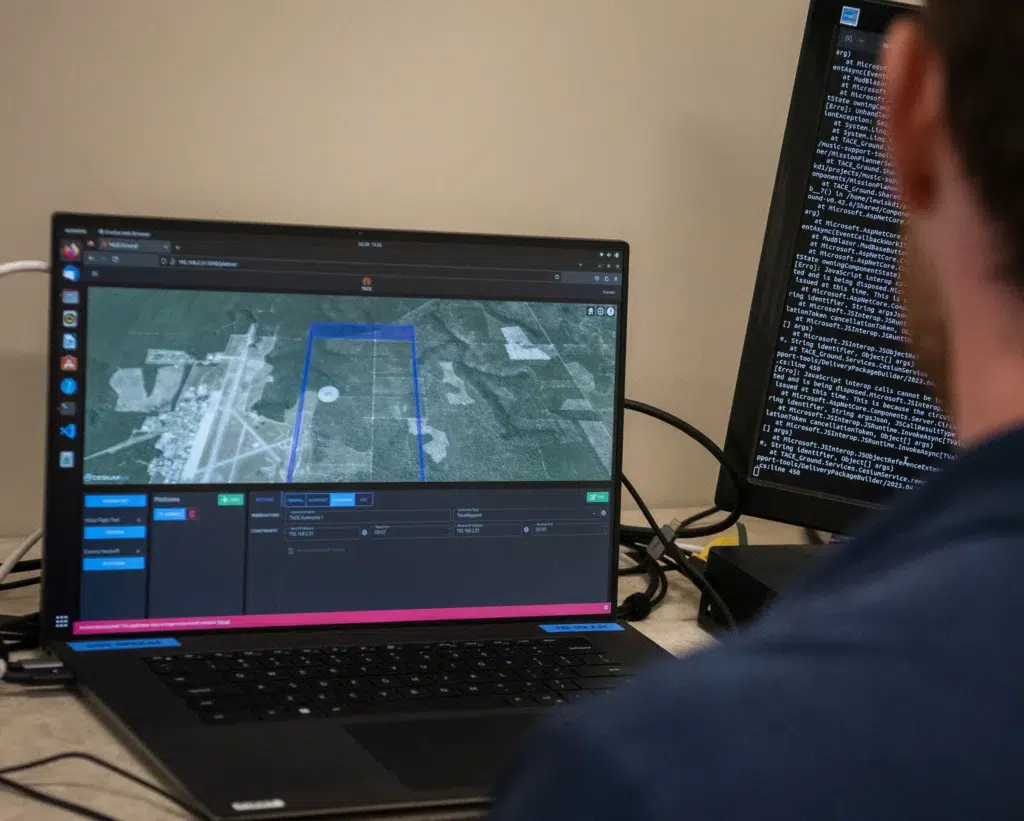

Testing an autonomous plane’s inner governor falls under a larger Test of Autonomy in Complex Environments (TACE) effort. Trust remains a major barrier to the integration of extensive autonomy into warfighting; humans in the loop need to know that autonomous or AI-governed systems are not going to become confused by conflicting information and make a choice that endangers humans or puts mission objectives at risk.

According to Air Force releases, TACE is contained in a software component of the test systems “that sits between the onboard autonomy and the aircraft itself.”

Not only can TACE override unwanted or unsafe commands, officials said in releases, it can also alter the world a test plane’s onboard autonomy perceives “to create more realistic scenarios for testing autonomy without jeopardizing the aircraft.” The MK III performed five TACE-testing flights over three days, for a total of 2.7 hours airborne.
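To make the idea of a layer that “sits between the onboard autonomy and the aircraft itself” more concrete, here is a minimal Python sketch of a boundary watchdog. It is not TACE’s actual code, which the Air Force has not published; the names `Position`, `Boundary`, `Watchdog`, and `filter_command`, the rectangular airspace, and the 100-meter margin are all assumptions made for illustration. The sketch covers only the override behavior described in the releases, not the ability to alter what the autonomy perceives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Position:
    """Hypothetical flat-earth position in meters from a reference point."""
    x: float
    y: float


@dataclass(frozen=True)
class Boundary:
    """Hypothetical rectangular test airspace."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, p: Position, margin: float = 0.0) -> bool:
        # True if p lies inside the box after shrinking it by `margin` on every side.
        return (self.x_min + margin <= p.x <= self.x_max - margin
                and self.y_min + margin <= p.y <= self.y_max - margin)


class Watchdog:
    """Illustrative filter between the autonomy's commands and the aircraft."""

    def __init__(self, boundary: Boundary, safe_point: Position, margin: float):
        self.boundary = boundary
        self.safe_point = safe_point
        self.margin = margin
        self.autonomy_engaged = True

    def filter_command(self, current: Position, commanded: Position) -> Position:
        # If the aircraft has come within `margin` of the boundary,
        # disengage autonomy; it stays disengaged until reset by a human.
        if not self.boundary.contains(current, self.margin):
            self.autonomy_engaged = False
        # Pass the autonomy's waypoint through only while autonomy is engaged;
        # otherwise send the aircraft to the pre-designated point.
        return commanded if self.autonomy_engaged else self.safe_point


if __name__ == "__main__":
    airspace = Boundary(0.0, 1000.0, 0.0, 1000.0)
    watchdog = Watchdog(airspace, safe_point=Position(500.0, 500.0), margin=100.0)
    rule_breaking_waypoint = Position(2000.0, 500.0)  # outside the airspace
    for x in (500.0, 800.0, 950.0):
        target = watchdog.filter_command(Position(x, 500.0), rule_breaking_waypoint)
        print(x, target, watchdog.autonomy_engaged)
```

In this sketch the autonomy keeps steering toward the out-of-bounds waypoint until the aircraft drifts within the margin, at which point the watchdog takes over and keeps control, mirroring the behavior the releases describe for the Osprey MK III.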

“We want to prepare the warfighter for the digital future that’s upon us,” Col. Tucker Hamilton, 96th Operations Group commander and Air Force AI test and operations chief, said in a released statement. “This event is about bringing the Eglin enterprise together and moving with urgency to incorporate these concepts in how we test.”

The experiments parallel work DARPA has done with unmanned ground vehicles in its Robotic Autonomy in Complex Environments with Resiliency (RACER) program. The goal of that line of research, which began last year, is to test off-road autonomy at “speeds on par with a human driver” and in unpredictable environments where obstacles and rapidly changing conditions might be more likely to scramble a robot’s decision-making capabilities.

The outcome is far from guaranteed. As self-driving cars become more common in American cities like San Francisco, reports are emerging about how unexpected inputs can cause dangerous confusion. For example, multiple reports have shown that graffiti can make a driverless car misread a stop sign as a 45-mile-per-hour speed limit sign. Further, Col. Tucker “Cinco” Hamilton, who is also an Air Force test pilot, made headlines around the internet in June when he described a hypothetical scenario in which an AI-enabled drone opted to kill the human operator feeding it “no-go” orders so it could execute its end mission of destroying surface-to-air missile sites.

“We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that,’” Hamilton said, according to highlight notes from the conference he spoke at. “So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

The Air Force ultimately walked back Hamilton’s account, saying the service had not conducted any drone simulations of this kind, and Hamilton himself later amended his remarks, calling the story “a thought experiment.”

While the U.S. military is eager to capitalize on the bright promise of AI and autonomy, from reliable unmanned platforms that take humans out of harm’s way to advanced automation that saves significant time and money, building trust in AI to make appropriate decisions in complex environments is a necessary prerequisite. If humans don’t trust the system, they won’t use it. A recent War on the Rocks article described how the introduction of the Automatic Ground Collision Avoidance System (Auto-GCAS) on F-16 fighter jets was slowed because pilots, irritated by “nuisance pull-ups” triggered when the system detected an obstacle that wasn’t really there, were turning the program off. Ultimately, though, the system grew smarter and pilots became more familiar with it, which allowed it to carry out its life-saving work.

The flights with the Osprey MK III were the first major experimental effort for the new ADAx proving ground, but others are soon to follow. The Air Force plans tests with the Viper Experimentation and Next-gen Ops Models, or VENOM, which will turn F-16s into “airborne flying test beds” with “increasingly autonomous strike package capabilities.” Also in the works is Project Fast Open X-Platform, or FOX, which aims to develop a way to install apps directly onto aircraft to “enable numerous mission-enhancing capabilities such as real-time data analysis, threat replication for training, manned-unmanned teaming, and machine learning.”

Hope Seck

Hope Hodge Seck is an award-winning investigative and enterprise reporter who has been covering military issues since 2009. She is the former managing editor for Military.com.
