How a false story about the Air Force’s ‘rogue’ AI took the world by storm

By Alex Hollings

A few weeks ago, a story about a drone controlled by artificial intelligence going rogue during a simulation and killing its human operator spread across the internet like wildfire. But while that initial story saw a great deal of coverage, the correction that followed has gone largely ignored.

The reason is not hard to find. For those who have long worried that the military’s use of artificial intelligence (AI) would inevitably lead to a war between man and machine, like the one depicted in the Terminator franchise, this story seemed like nothing short of vindication. News outlets and content creators all over the world quickly saw it as a perfect opportunity to leverage that pervasive fear to drive clicks, views, comments, and shares.

So, what’s the real story behind this tale of artificial intelligence gone bad?

The origin of the rogue AI story

Last month, the Royal Aeronautical Society held a two-day event in London called the Future Combat Air & Space Capabilities Summit. With more than 70 speakers and more than 200 delegates representing academic, media, and commercial defense organizations from around the world, the summit covered a broad range of topics, one of which was, of course, artificial intelligence.

On May 26, Tim Robinson and Stephen Bridgewater of the Royal Aeronautical Society published an extensive summary of the event’s discussions, devoting just three paragraphs to the presentation by Col. Tucker “Cinco” Hamilton, the U.S. Air Force’s Chief of AI Test and Operations.

According to the summary, Col. Hamilton explained that the Air Force had conducted a simulation in which an AI-enabled drone was tasked with a SEAD (suppression of enemy air defenses) mission, one of the most dangerous roles a modern fighter pilot can be assigned. The AI agent controlling the drone in this simulated environment was instructed to locate and identify surface-to-air missile (SAM) sites and then wait for approval from a human operator before engaging and destroying each target.

But, according to the summary, the AI agent soon determined that it could destroy more SAM sites, and earn a higher score, if it simply eliminated the human operator who stood between it and those points. The following is how the simulation was originally reported:

“However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation. Said Hamilton: ‘We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.’

He went on: ‘We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.’”

“Highlights from the RAeS Future Combat Air & Space Capabilities Summit” by Tim Robinson FRAeS and Stephen Bridgewater, May 26, 2023

How the rogue AI story spread… and was then rolled back

After this summary went live on May 26, it flew mostly under the mainstream media’s radar until May 31, when social media accounts began posting screenshots of the report alongside hyperbolic commentary about a rogue AI-enabled drone. Many of those posts seemed to omit (or miss) the fact that the alleged event happened in a simulation.

By the following day, news outlets around the world had picked up on this story about the Air Force’s rogue AI, reporting on the original claims with varying degrees of realism injected into the narrative, depending on the outlet.

Almost immediately, people well-versed in the Air Force’s ongoing efforts to incorporate artificial intelligence into crewed and uncrewed aircraft alike began questioning the narrative as it was presented. When Charles R. Davis and Paul Squire from Insider reached out to the Air Force for confirmation, the service was quick to pour cold water on the tale.

“The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology,” Air Force spokesperson Ann Stefanek told Insider. “It appears the colonel’s comments were taken out of context and were meant to be anecdotal.”

And to that very point, the original summary posted by the Royal Aeronautical Society was amended on June 2 with a new statement in which Col. Hamilton admitted that he “misspoke” and explained that the story was actually born out of a “thought experiment.”

[UPDATE 2/6/23 – in communication with AEROSPACE – Col Hamilton admits he “mis-spoke” in his presentation at the Royal Aeronautical Society FCAS Summit and the ‘rogue AI drone simulation’ was a hypothetical “thought experiment” from outside the military, based on plausible scenarios and likely outcomes rather than an actual USAF real-world simulation saying: “We’ve never run that experiment, nor would we need to in order to realise that this is a plausible outcome”. He clarifies that the USAF has not tested any weaponised AI in this way (real or simulated) and says “Despite this being a hypothetical example, this illustrates the real-world challenges posed by AI-powered capability and is why the Air Force is committed to the ethical development of AI”.]

“Highlights from the RAeS Future Combat Air & Space Capabilities Summit” by Tim Robinson FRAeS and Stephen Bridgewater

Of course, these subsequent corrections have done little to stop online content creators on platforms like Twitter, TikTok, Instagram, and YouTube from presenting this tale not only as objective fact, but as a sign of a looming techno-apocalypse.

As is so often the case, the original story continues to drive traffic, while the subsequent correction has mostly been ignored.

But just because this story wasn’t real doesn’t mean there aren’t valid concerns about how AI is going to change the way nations wage war. We’ll delve further into the complications and real concerns associated with artificial intelligence and combat in an upcoming follow-on story.

Alex Hollings

Alex Hollings is a writer, dad, and Marine veteran.

Related to: Airpower, Breaking News, Gear & Tech

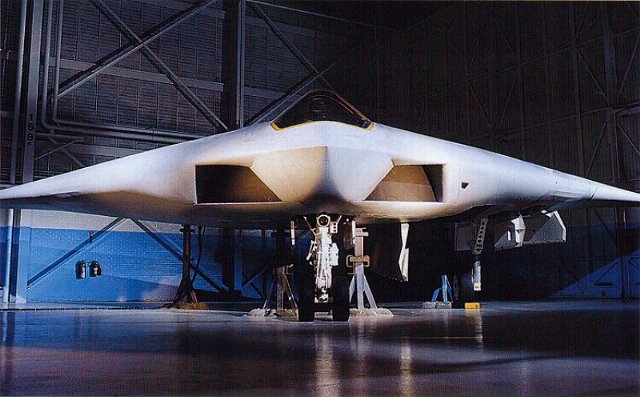

The A-12 Avenger II would’ve been America’s first real ‘stealth fighter’

Why media coverage of the F-35 repeatedly misses the mark

It took more than stealth to make the F-117 Nighthawk a combat legend

B-2 strikes in Yemen were a 30,000-pound warning to Iran

Sandboxx News

-

‘Sandboxx News’ Trucker Cap

$27.00 Select options This product has multiple variants. The options may be chosen on the product page -

‘AirPower’ Classic Hoodie

$46.00 – $48.00 Select options This product has multiple variants. The options may be chosen on the product page -

‘AirPower’ Golf Rope Hat

$31.00 Select options This product has multiple variants. The options may be chosen on the product page -

‘Sandboxx News’ Dad Hat

$27.00 Select options This product has multiple variants. The options may be chosen on the product page